“From Cloud to Edge: Why Context-Aware AI Is the Future” – Insights from Mr. Deepak Kole

As artificial intelligence becomes deeply embedded in critical infrastructure, everyday devices and human decision-making, the limitations of cloud-centric AI models are becoming more evident. Relying on distant data centers to process sensor inputs or make predictions introduces latency, raises privacy concerns and increases operational fragility, especially in scenarios requiring real-time, local adaptation.

Recent advancements in edge computing, coupled with optimized lightweight AI models, are enabling a new generation of context-aware AI systems. These systems are not only fast and responsive but also capable of understanding and adapting to their environments using localized data without constant cloud supervision.

From autonomous vehicles navigating chaotic urban environments to industrial robots responding to environmental changes in real time, edge AI is becoming indispensable. In sectors like healthcare, privacy mandates and the need for instant diagnostic support have accelerated the shift to localized, patient-side AI decision-making. The result is a smarter, faster and more resilient form of artificial intelligence that lives and thinks at the edge.

Deepak Kole is a technology professional with over 12 years of experience building scalable cloud and backend systems. A multiple award-winning engineer and keynote speaker at global AI conferences, he has authored papers on intelligent infrastructure and has been recognized as an IEEE Senior Member and Fellow of several research societies.

“We are at the threshold of a fundamental shift in how AI is deployed and delivered. The traditional approach of centralized, cloud-first AI is starting to buckle under the pressure of modern demands: sub-second latency, data sovereignty, real-time adaptation and cost-effective scalability.

While I haven’t contributed directly to this article’s case studies, I recently addressed this very transition in my keynote at ICCIDC 2025, titled ‘From Centralized AI to Edge Intelligence’. My observations across industry use cases, from healthcare to manufacturing, clearly show that edge-based, context-aware AI isn’t just an optimization. It’s a necessity.

With advances in federated learning, sensor fusion, lightweight neural networks and on-device LLMs, we now have the tools to build AI systems that don’t just react; they understand. They adapt to time of day, user intent, environmental changes and local constraints, all without asking permission from the cloud.

This shift also improves privacy and reliability, as sensitive data remains local and systems don’t rely on persistent internet connectivity. I believe organizations that embrace edge-first AI architectures today will lead in delivering safer, smarter and more personalized experiences tomorrow.”

Context Awareness Is the Differentiator in Edge AI

“Many still associate edge computing with simply reducing latency,” says Mr. Deepak Kole. “But that’s only part of the story. The real game-changer is enabling AI to adapt to its immediate physical environment, to make sense of what’s happening around it, right here, right now.”

Deepak explains that while traditional AI systems operate on static inputs from centralized data streams, edge-based AI systems observe context firsthand. This includes motion, light, ambient noise, device location, user behavior patterns and time. “This context isn’t just supplementary; it’s central to making the right decision, at the right time,” he adds.

Industries such as healthcare, smart cities, autonomous vehicles and industrial automation are especially ripe for context-aware AI. In each case, milliseconds matter, and understanding localized nuances can mean the difference between optimal performance and system failure.

Why Edge + Context = Real-Time Intelligence

Deploying AI to the edge is challenging, but it’s the only way to enable true real-time intelligence.

“You can’t pause an autonomous vehicle to send sensor data to the cloud and wait for instructions,” Deepak explains. “The AI system needs to fuse sensor inputs, understand surroundings, detect anomalies and make decisions on the fly. The edge is not a convenience; it’s a requirement.”

He points to the role of sensor fusion: the merging of inputs from accelerometers, gyroscopes, temperature sensors, microphones and cameras to form a unified understanding of context. Add in on-device learning, and these systems can adapt to new patterns without ever uploading sensitive data.
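As a rough illustration of sensor fusion on a small device, the sketch below uses a complementary filter, one common and lightweight fusion technique, to combine a gyroscope and an accelerometer into a single tilt estimate. It is not taken from Deepak's work; the function names, the sample format and the `alpha` weight are assumptions chosen for the example.

```python
import math


def accel_tilt_deg(ax: float, az: float) -> float:
    """Tilt angle in degrees implied by gravity on the accelerometer x/z axes."""
    return math.degrees(math.atan2(ax, az))


def complementary_filter(samples, dt: float, alpha: float = 0.98):
    """Fuse gyro rate and accelerometer tilt into one angle estimate per sample.

    samples: iterable of (gyro_rate_deg_per_s, ax, az) tuples (hypothetical format).
    dt:      time between samples in seconds.
    alpha:   weight on the integrated gyro path (assumed value; tune per device).
    """
    angle = None
    estimates = []
    for gyro_rate, ax, az in samples:
        accel_angle = accel_tilt_deg(ax, az)
        if angle is None:
            # No history yet, so start from the accelerometer reading.
            angle = accel_angle
        else:
            # Trust the gyro for short-term change, the accelerometer for long-term drift correction.
            angle = alpha * (angle + gyro_rate * dt) + (1 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


# A stationary, level device whose gyro reports a constant 1 deg/s bias.
readings = [(1.0, 0.0, 1.0)] * 1000
fused = complementary_filter(readings, dt=0.01)
```

Integrating the biased gyro alone over these 10 seconds would drift by 10 degrees. The fused estimate stays below half a degree because the accelerometer keeps pulling it back toward level.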

“This approach also drastically improves data privacy,” says Deepak. “Especially in healthcare or finance, you want inference to happen where the data lives, not in a distant data center. Edge AI lets us respect privacy without compromising intelligence.”

Navigating Technical and Organizational Hurdles

Adopting edge intelligence offers tangible value, but it’s not without its own challenges. On the technical front, designing for real-time, local inference demands tight control over model size, power consumption, memory use and data routing.

“There’s a lot of nuance,” says Mr. Deepak Kole. “It’s not just about porting a cloud model to the edge. You must consider hardware limitations, bandwidth constraints and what context truly means at the device level. It requires rearchitecting intelligence, not just relocating it.”
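To make the model-size constraint concrete, here is a minimal sketch of one widely used technique, symmetric int8 weight quantization, along with a back-of-the-envelope storage estimate. The helper names and the 5-million-parameter figure are illustrative assumptions, not details from the article.

```python
def model_size_mb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bits_per_weight / 8 / 1e6


def quantize_int8(weights):
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]


# Hypothetical 5M-parameter model: float32 weights versus int8 weights.
fp32_mb = model_size_mb(5_000_000, 32)   # 20.0
int8_mb = model_size_mb(5_000_000, 8)    # 5.0

# Round-trip a few example weights to see the precision cost.
weights = [0.5, -1.0, 0.25, 0.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
```

The storage drops by a factor of four, while each restored weight differs from the original by at most half a quantization step. Whether that error is acceptable depends on the model and task, which is part of the rearchitecting Deepak describes.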

Beyond the technical hurdles, organizations often face structural and cultural resistance. Many still rely on legacy data flows and centralized control paradigms. A successful shift to edge AI demands cross-functional collaboration among engineering, security, compliance, product and executive teams.

“Start with visibility,” Deepak advises. “Know where your AI is deployed, where latency matters, where privacy is critical. That’s the foundation for a smart edge strategy.”

Strategic Alignment and Industry Standards

Deepak follows the progress of open-source frameworks and industry standards that are helping shape the future of edge computing, such as ONNX Runtime and the edge AI benchmarks from MLCommons, including MLPerf Tiny.

“These ecosystems are maturing quickly,” he notes. “But broad adoption still depends on shared understanding across disciplines. Leaders must embrace AI model agility: the ability to swap, update and optimize AI models across heterogeneous hardware and operating environments.”

Strategic leadership is key. Edge intelligence won’t thrive in isolation; it needs policy alignment, lifecycle planning and interoperability frameworks to ensure it scales securely and sustainably.

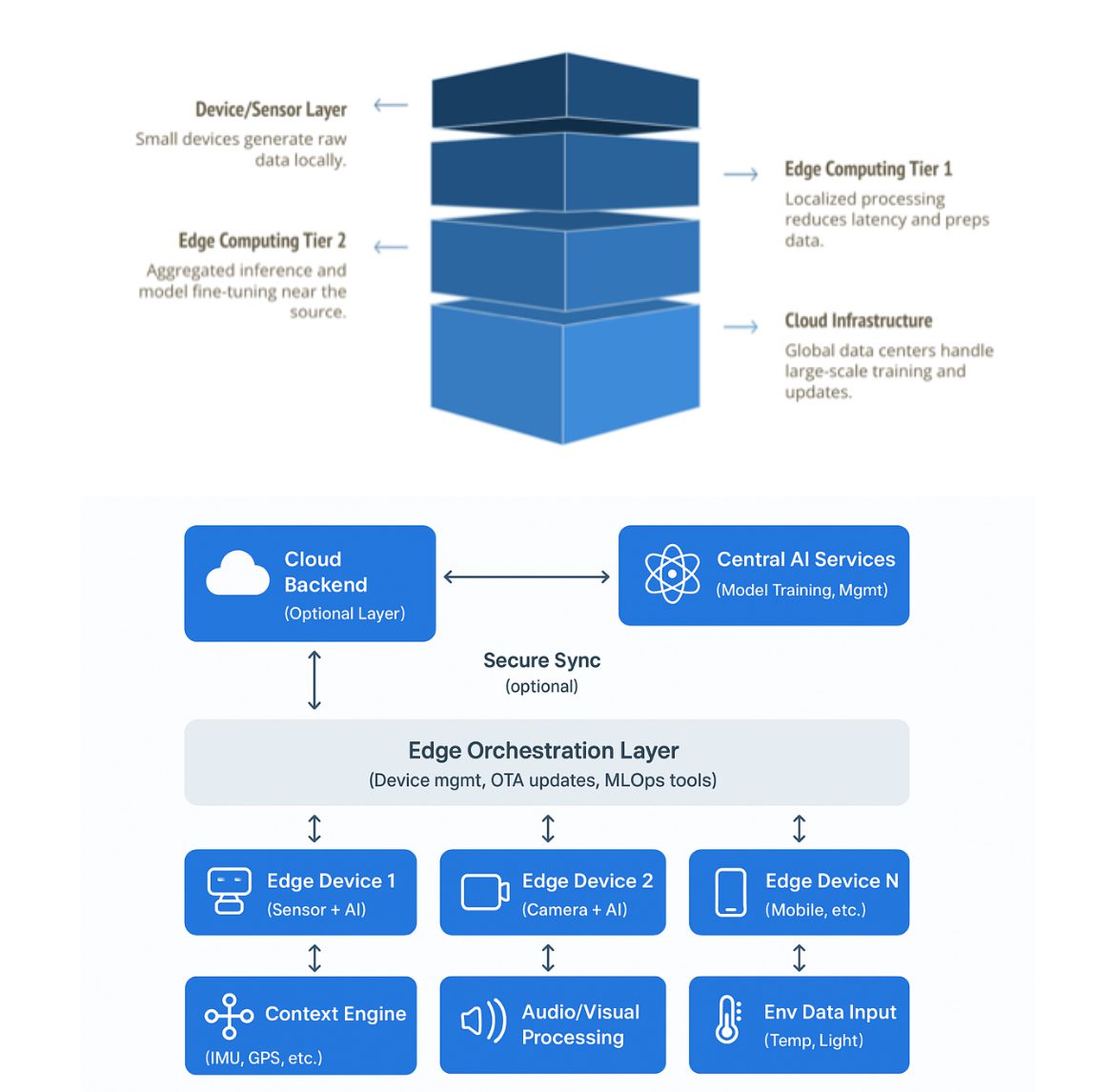

Visualizing the Shift to Context-Aware Edge Intelligence

A visual progression illustrating the transition from traditional cloud-first AI pipelines to real-time, context-aware edge systems. The architecture shows how cloud-based model training, edge orchestration and distributed device inference work together to create intelligent, adaptive systems.

How to Use This Roadmap

- Audit & Inventory: Identify workloads that depend heavily on low-latency responses or handle sensitive data.

- Pilot Edge Inference: Begin with hybrid deployments where edge devices perform inference but still sync with cloud models.

- Plan Migration: Define criteria, such as cost thresholds, performance gains or regulatory needs, for transitioning more workloads to the edge.

- Update Infrastructure Policies: Align security, observability and compliance tools with edge capabilities and limitations.

In short, this diagram acts as a strategic blueprint for moving from centralized AI to decentralized, responsive and privacy-preserving edge intelligence.
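The audit step of the roadmap can be sketched as a simple inventory check. The version below is only one possible reading of it: the `Workload` fields, the example workloads and the rule itself (flag anything that handles sensitive data or cannot meet its latency budget through the cloud) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    latency_budget_ms: float      # slowest acceptable response time
    cloud_round_trip_ms: float    # measured or estimated network plus cloud inference time
    handles_sensitive_data: bool


def edge_candidate(w: Workload) -> bool:
    """Flag workloads that miss their latency budget via the cloud or touch sensitive data."""
    return w.handles_sensitive_data or w.cloud_round_trip_ms > w.latency_budget_ms


def audit(workloads):
    """Return the names of workloads worth piloting at the edge."""
    return [w.name for w in workloads if edge_candidate(w)]


# Hypothetical inventory with made-up numbers.
inventory = [
    Workload("defect-detection", 50, 120, False),
    Workload("patient-triage", 500, 150, True),
    Workload("weekly-report", 60_000, 300, False),
]
candidates = audit(inventory)
print(candidates)  # ['defect-detection', 'patient-triage']
```

A real audit would weigh more criteria, such as cost thresholds and regulatory needs, but even a coarse pass like this gives the migration plan a ranked starting list.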

Strategic Guidance for Technology Leaders

As enterprises prepare for the shift toward edge AI, Mr. Deepak Kole offers a forward-thinking approach that developers, architects and CxOs can apply today:

- Identify low-latency or privacy-sensitive use cases: Focus on AI workloads where edge inference offers meaningful benefits.

- Assess your edge readiness: Evaluate infrastructure constraints like power, memory and connectivity at target deployment sites.

- Deploy pilot projects: Start with small-scale, real-world implementations to validate system performance and contextual responsiveness.

- Embrace model portability: Choose frameworks and tools that allow flexible deployment across CPUs, GPUs and NPUs.

- Foster internal education and alignment: Ensure leadership, engineering and compliance teams understand the value and roadmap of edge AI.
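Model portability can be sketched as a backend-selection step that runs the same model on whatever accelerator a device offers. The helper below is hypothetical and deliberately framework-free. It borrows ONNX Runtime's execution-provider naming as an assumption about the target runtime, with `QNNExecutionProvider` standing in for an NPU backend.

```python
# Preference order: NPU first, then GPU. CPU is appended as a fallback when available.
PREFERRED = ["QNNExecutionProvider", "CUDAExecutionProvider"]
CPU = "CPUExecutionProvider"


def choose_providers(available, preferred=PREFERRED):
    """Return the preferred backends present on this device, with CPU last when present."""
    chosen = [p for p in preferred if p in available]
    if CPU in available and CPU not in chosen:
        chosen.append(CPU)
    return chosen


# Two hypothetical devices exposing different hardware.
gpu_box = choose_providers(["CUDAExecutionProvider", "CPUExecutionProvider"])
sensor_node = choose_providers(["CPUExecutionProvider"])

# With ONNX Runtime installed, the same list could be used as follows
# ("model.onnx" is a placeholder path):
#   import onnxruntime as ort
#   providers = choose_providers(ort.get_available_providers())
#   session = ort.InferenceSession("model.onnx", providers=providers)
```

Keeping the selection logic separate from the model file is what lets a team ship one artifact to heterogeneous hardware and change the preference order without retraining.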

“Organizations that take action now won’t just optimize AI performance; they’ll build future-ready platforms capable of intelligent autonomy,” Deepak says. “The edge isn’t just an endpoint. It’s an evolution.”

“The views and opinions expressed in this article are solely my own and do not necessarily reflect those of any affiliated organizations or entities.”